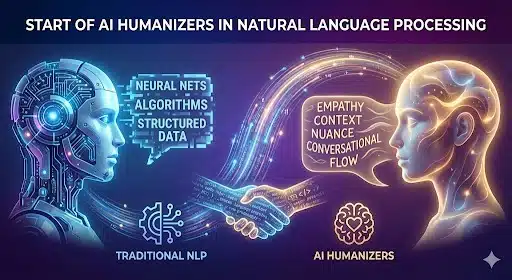

The rapid development of natural language processing (NLP) has opened up many applications based on understanding and generating human language. One growing area is the development of AI humanizers: systems meant to make AI-generated content read more like human writing.

This introduction outlines the basic notion of AI humanizers, especially in educational settings, and sets the stage for a fuller look at their purpose, underlying technologies, problems, and effects.

AI humanizers are algorithms that run inside NLP systems and modify AI-generated text so that it closely resembles human style and phrasing. The need for such systems has grown with the increasing use of automated material in educational and professional environments, where the line between human and machine-generated content can matter.

These tools change linguistic features such as syntax, vocabulary, and stylistic nuance, making the text harder to flag for the AI detectors that schools, colleges, and other organizations sometimes employ to uphold standards of academic integrity.

The creation and use of AI humanizers highlight the delicate balance that must be struck between advancing technological capability and ethical concerns such as academic integrity and transparency.

With an emphasis on their influence and application in educational settings, the sections that follow explore how these systems operate, the key technologies enabling them, the challenges developers encounter, and the possible future applications of AI humanizers.

How AI Humanizers Help Defeat Detectors

By concealing the mechanical fingerprints of AI-produced text, AI humanizers make it harder for AI detectors to differentiate between human and synthetic writing. These detectors are meant to look for signs of AI involvement in text, including regular sentence structure, particular repeating patterns, or unusual phrasing more typical of machine learning models.

Detectors are often built on complex algorithms themselves. By enhancing the human-like features of AI text outputs, humanizers are intended to preserve the usefulness of AI tools in educational contexts without crossing ethical limits.
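Two of the detector-side signals mentioned above, regular sentence structure and repeated patterns, can be illustrated with simple text statistics. The sketch below is only an illustration under stated assumptions: the function names and sample sentences are hypothetical, and real detectors rely on trained models rather than these two numbers.

```python
import re
from collections import Counter
from statistics import mean, pstdev
from typing import List


def sentence_lengths(text: str) -> List[int]:
    """Split on '.', '!' or '?' and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (0.0 means perfectly uniform)."""
    lengths = sentence_lengths(text)
    if not lengths:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def repeated_bigram_rate(text: str) -> float:
    """Share of word bigrams whose pair of words occurs more than once."""
    words = re.findall(r"[a-z']+", text.lower())
    bigrams = list(zip(words, words[1:]))
    if not bigrams:
        return 0.0
    counts = Counter(bigrams)
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(bigrams)


# Hypothetical sample texts: one very regular, one with varied rhythm.
uniform = "The model writes text. The model checks text. The model sends text."
varied = "It writes. Then, after a long pause, it checks every line again. Done."

print(length_variation(uniform), repeated_bigram_rate(uniform))
print(length_variation(varied), repeated_bigram_rate(varied))
```

The uniform sample scores zero variation and a high repetition rate, while the varied sample shows the opposite profile, which is the kind of contrast the paragraph above describes.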

An AI humanizer works by analyzing and changing the elements of a text that usually disclose machine authorship. It can adjust the style to reflect typical human errors and slang, vary the length of sentences, and use synonyms to avoid repeating the same words. By imitating the varied writing styles typical of particular human authors, it makes the material appear more naturally written, which complicates detection even further.

The growing sophistication of AI detectors has driven the parallel development of AI humanizers. To stay effective, humanizers must evolve at the same rate that detectors improve at recognizing AI-produced content. This cat-and-mouse game is a significant technical contest in which advances on one side shape the development of the other.

Furthermore, although the main work of AI humanizers appears to center on deception, it is important to understand their utility in maintaining the educational value of projects and assignments.

They help ensure that AI tools continue to be used as assistive technology that supports students in learning and understanding, rather than merely as a means of producing potentially unethical work.

The following sections break down the layers of technology on which these AI humanizers run in order to render AI-created material indistinguishable from human writing.

Major Technologies Behind AI Humanizers

Among other techniques, AI humanizers use deep learning algorithms suited to natural language processing tasks to enhance the human-likeness of AI-produced content.

These technologies focus on understanding and simulating human language patterns, which enables machines to produce results that closely match the style and content of human-written text.

Transformer-based models like OpenAI’s GPT (Generative Pre-trained Transformer) lie at the center of these AI humanizers because, having been trained on vast volumes of human writing, they produce coherent and contextually relevant text. Deep neural networks paired with attention mechanisms predict and generate text sequences that are not only grammatically accurate but also contextually rich and stylistically varied.
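To make the attention mechanism mentioned above more concrete, here is a minimal sketch of scaled dot-product attention for a single query, the basic operation inside transformer models. The two-dimensional vectors are made-up toy values, and nothing here is claimed to match the internals of any particular humanizer or GPT model.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Similarity of the query to each key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


# Toy embeddings for three context tokens (hypothetical numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

print(attention(query, keys, values))
```

Because the query aligns with the first and third keys, their values receive more weight, so the first output component ends up larger than the second. This is how context tokens that are more relevant to the current position contribute more to the prediction.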

AI humanizers build on the raw generative power of transformers but also use techniques like adaptive learning, style transfer, and sentiment analysis. Style transfer involves changing the writing style of the output to match a desired tone or formality level, so that the content suits human readers in academic settings. Sentiment analysis checks that the emotional tone of the writing matches the intended message, which gives automated content a more human-like quality.
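The sentiment step described above amounts to checking that a rewrite keeps the emotional direction of the original. The sketch below uses a tiny hand-made word list purely as an assumption for illustration; the lexicons and function names are hypothetical, and a production system would use a trained sentiment model instead.

```python
import re
from typing import Set

# Hypothetical mini-lexicons, for illustration only.
POSITIVE: Set[str] = {"good", "great", "clear", "helpful", "strong"}
NEGATIVE: Set[str] = {"bad", "poor", "weak", "confusing", "unclear"}


def polarity(text: str) -> int:
    """Count positive words minus negative words."""
    words = re.findall(r"[a-z]+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def same_tone(original: str, rewrite: str) -> bool:
    """True when both texts lean the same way: positive, negative, or neutral."""
    def sign(n: int) -> int:
        return (n > 0) - (n < 0)
    return sign(polarity(original)) == sign(polarity(rewrite))


original = "The argument is clear and strong."
print(same_tone(original, "A great, helpful argument."))  # True
print(same_tone(original, "The argument is weak."))       # False
```

A rewrite that flips the sign of the polarity score would be rejected by a check of this kind, whatever its stylistic merits.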

Adaptive learning algorithms enable AI humanizers to learn from feedback and continually improve their output, narrowing the gap between human and machine-created writing even further. This capability lets the system adapt to shifting language usage patterns and emerging writing styles, which matters especially if its results are to keep going unnoticed over time.

By combining these technologies, AI humanizers not only fool AI detectors but can also raise the quality of the writing by generating realistic, engaging outputs that read as if written by humans. Together, these techniques form the basis of AI humanizers and provide a robust framework for the difficult process of humanizing AI writing in a range of domains, notably education.

Chief Difficulties and Solutions in Creating AI Humanizers

Producing effective AI humanizers poses several challenges, the first of which is balancing greater human-likeness against the accuracy of the content. A major concern is ensuring that the changes made by an AI humanizer do not impair the factual accuracy or original intent of the content. This is especially vital in academic settings, where data integrity is paramount.

To overcome these obstacles, engineers use machine learning models that can identify and preserve the main meanings and facts while changing the stylistic and grammatical components of the text. Ensuring consistent humanization quality across many content types and topics is another big challenge, and it requires a flexible approach to algorithm design.
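One simple way to picture the fact-preservation requirement above is a check that every number in the original text survives the rewrite. The sketch below is a deliberately narrow assumption: it only compares numeric tokens, the function names and sample sentences are hypothetical, and a real system would also need to compare names, dates in words, and meaning.

```python
import re
from typing import Set


def numeric_facts(text: str) -> Set[str]:
    """Extract integers, decimals, and percentages as a set of strings."""
    return set(re.findall(r"\d+(?:\.\d+)?%?", text))


def missing_facts(original: str, rewrite: str) -> Set[str]:
    """Numbers present in the original but absent from the rewrite."""
    return numeric_facts(original) - numeric_facts(rewrite)


# Hypothetical example sentences.
original = "The 2019 survey covered 1200 students and found a 4.5% error rate."
faithful = "In 2019, a survey of 1200 students reported an error rate of 4.5%."
drifted = "A recent survey of about 1000 students reported a small error rate."

print(missing_facts(original, faithful))  # empty set: every number survived
print(missing_facts(original, drifted))   # the year, sample size, and rate are gone
```

An empty result means the stylistic rewrite kept every figure, while a non-empty result flags a rewrite that changed or dropped factual details.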

Furthermore, using AI humanizers has ethical consequences that need to be thoughtfully considered. Rules and standards for the correct use of these technologies need to be created to help ensure that they are applied appropriately. Transparency is vital: mechanisms should be in place to show how AI humanizers are used in course materials.

Another real issue arises from the ongoing development of AI detectors. Keeping pace as detectors improve calls for regular updates to AI humanizers. Understanding the most current AI detection methods and modifying humanizer technologies accordingly calls for continuous research and funding.

Continuous collaboration among ethicists, engineers, and educators is needed to overcome these challenges and develop sustainable, ethically sound, and efficient AI humanizers. This collaboration supports the full realization of AI humanizers’ potential while maintaining high standards of ethical accountability and academic integrity.

AI Humanizers in Academia: Applications and Future Trends

The use of AI humanizers in academia is predicted to increase greatly as educational institutions bring more digital technologies and artificial intelligence into their programs. One of the main future developments is the growing personalization of AI humanizers to match specific academic subjects and writing styles, which would increase their reliability and efficacy. This might call for a more nuanced approach in which humanizers adjust their output to the subject matter, from the humanities to the sciences, to ensure correctness and compliance with academic criteria.

Beyond customization, we might see increasingly proactive AI humanizers that not only adjust text to avoid detection but also actively engage in the educational process. For instance, these tools may combine the roles of writing aid and humanizer by giving students helpful feedback or ideas on how to improve their writing style.

Moreover, as AI applications gain ground in education, more focus will be given to the creation of ethical guidelines and laws governing their use. Establishing precise limits and criteria for when and how these tools can be used will probably be required to make sure they support educational goals without compromising academic honesty.

Integrating AI humanizers with other educational tools, including learning management systems and plagiarism detection systems, could produce more complete learning environments. Through this integration, AI humanizers could help encourage ethical and effective communication by smoothing the transition between the learning, writing, and assessment processes.

As universities and legislators grapple with the advantages and drawbacks of artificial intelligence, the growth and ethical use of AI humanizers will remain a major topic. These technologies will need to serve academic goals transparently and responsibly if they are to be embraced and effective in such contexts. The direction in which these technologies grow, and where they finally fit in the educational system, will continue to be shaped by the interaction among developers, teachers, and legislators.

Conclusion: The Influence of AI Humanizers on Academic Integrity

In the field of natural language processing, the creation of AI humanizers is a notable technical innovation with significant repercussions for educational integrity. Making AI-generated material more human-like is one way these methods help to maintain fairness in academic settings.

These technologies are meant to ensure that students can benefit from advances in artificial intelligence, enhancing learning and output while maintaining the ethical principles expected in educational contexts.

Still, using AI humanizers raises challenging ethical issues. It is difficult to find a middle ground between using artificial intelligence to enhance education and ensuring that it does not foster dishonesty or fraud.

Although AI humanizers in education seem to have a promising future, they will require ongoing adaptation and careful management. As AI technologies advance and become more ingrained in the educational system, requiring changes in technology, ethical standards, and educational rules, the function of AI humanizers will likely become more critical.

To sum up, although AI humanizers have drawbacks, they still offer plenty of opportunities to enhance learning experiences and results. Their design and use will certainly be the subject of great debate and dynamic development in the quest to protect and enhance academic integrity.

Frequently Asked Questions

What are AI humanizers?

They are NLP algorithms designed to rewrite AI-generated text, adjusting syntax and style to make it sound indistinguishable from human writing.

How do AI humanizers make text harder to detect?

They remove robotic patterns by varying sentence structure, using synonyms, and mimicking human nuances (like slang or minor errors) to hide the machine’s “fingerprints.”

What technologies do AI humanizers use?

They use deep learning, transformer models (like GPT), style transfer to adjust tone, and sentiment analysis to ensure emotional consistency.